- TheTip.AI - AI for Business Newsletter

DeepSeek R2 achieves GPT-4 results at a fraction of the cost

90% cheaper, same AI quality

Hey AI Enthusiast,

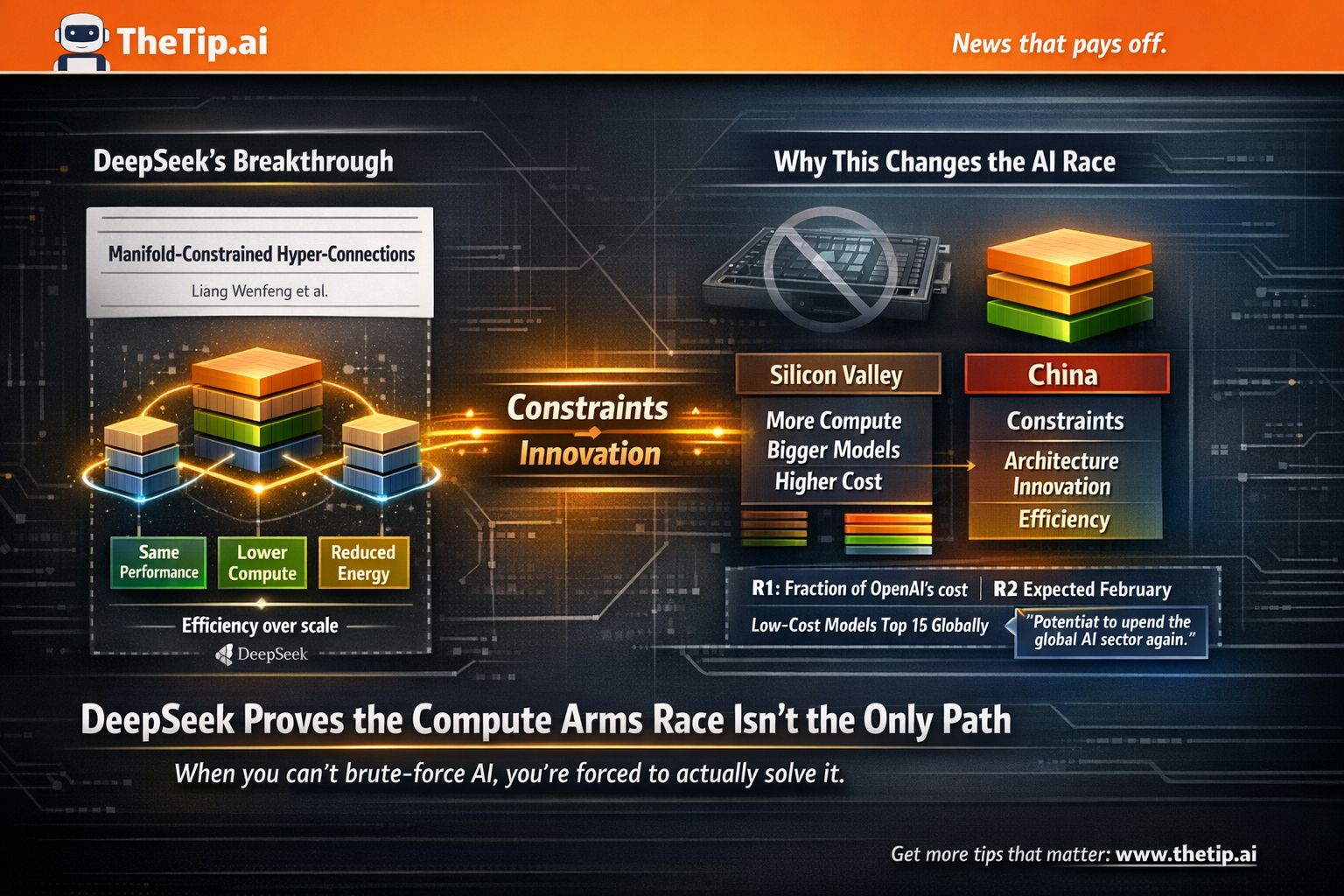

DeepSeek just published a paper that's going to make Silicon Valley uncomfortable.

They built a new AI training method that uses way less compute. Less energy. But delivers the same results.

It's called Manifold-Constrained Hyper-Connections.

The US banned China from accessing NVIDIA's best chips. Thought that would slow them down.

Instead, it forced them to get smarter.

DeepSeek's R1 model last year cost a fraction of what OpenAI spent. Now R2 is expected to drop in February. Same playbook.

Build better with less.

Here's what caught my attention: When you can't brute-force a problem, you have to actually solve it.

And that's creating a completely different kind of AI innovation.

But first, today's prompt (then why doing less is about to become the biggest marketing advantage...)

🔥 Prompt of the Day 🔥

One File Management Framework: Use ChatGPT or Claude

Act as a creative operations specialist. Create one asset library system for [AGENCY] that eliminates "where's that file?" chaos.

Essential Details:

Team Size: [NUMBER OF PEOPLE]

Client Count: [ACTIVE ACCOUNTS]

Asset Types: [IMAGES/VIDEOS/COPY/GRAPHICS]

Storage Platform: [GOOGLE DRIVE/DROPBOX/DAM]

Approval Workflow: [CLIENT REVIEW PROCESS]

Retention Policy: [HOW LONG TO KEEP]

Create one organization system including:

Folder structure template

File naming convention rules

Version control system

Client approval tracking

Archive protocol (old campaigns)

Quick-access tagging system

Team permission guidelines

Goal: anyone can find any file in 10 seconds. Keep the output under 200 words total.

🔮 Future Friday 🔮

The Marketing Strategy That Wins: Shut Up

Every marketing book tells you the same thing.

More touchpoints. More nudges. More personalization. More engagement.

By 2029, the brands that win will do the opposite.

They'll know when to go silent.

Not because they're lazy. Because silence becomes the highest-value action.

I'm calling it AI-Curated Silence.

And it's going to flip digital marketing on its head.

What It Actually Is

Right now, marketing automation works like this: If someone opens but doesn't click, send more reminders. If they ghost you, retarget harder. If engagement drops, increase frequency.

AI-Curated Silence does the exact opposite.

The AI watches signals:

Notification fatigue

Diminishing response rates

Delayed opens

Emotional overload patterns

"Ghost engagement" (opens with no action)

Unsubscribe risk indicators

Then it makes a decision: Don't message them. Don't notify. Don't personalize. Don't engage.

Because any interaction right now will reduce future engagement or trust.

Silence becomes a deliberate marketing output.
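Here's a rough sketch of what that decision could look like in code. It assumes each signal above has already been scored from 0.0 (none) to 1.0 (severe). The weights, the 0.6 threshold, and every name in it are my own placeholders, not anything from a real martech platform.

```python
from dataclasses import dataclass


@dataclass
class AttentionSignals:
    """Per-user fatigue signals, each scaled 0.0 (none) to 1.0 (severe)."""
    notification_fatigue: float
    response_decline: float    # diminishing response rates
    open_delay: float          # delayed opens
    emotional_overload: float
    ghost_engagement: float    # opens with no action
    unsubscribe_risk: float


# Hypothetical weights and threshold: assumptions to tune on your own data.
WEIGHTS = {
    "notification_fatigue": 0.20,
    "response_decline": 0.15,
    "open_delay": 0.10,
    "emotional_overload": 0.15,
    "ghost_engagement": 0.20,
    "unsubscribe_risk": 0.20,
}
SILENCE_THRESHOLD = 0.6


def fatigue_score(signals: AttentionSignals) -> float:
    """Weighted average of the signals, still on a 0.0 to 1.0 scale."""
    total = sum(w * getattr(signals, name) for name, w in WEIGHTS.items())
    return total / sum(WEIGHTS.values())


def should_stay_silent(signals: AttentionSignals) -> bool:
    """True means: don't message, don't notify, don't engage."""
    return fatigue_score(signals) >= SILENCE_THRESHOLD


if __name__ == "__main__":
    worn_out = AttentionSignals(0.9, 0.8, 0.7, 0.6, 0.9, 0.8)
    print(should_stay_silent(worn_out))
```

A real system would likely learn these weights instead of hard-coding them, but the shape is the same: score the fatigue, then let silence be one of the possible outputs.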

A Realistic Scenario (2027)

A user:

Opens your emails but never clicks

Scrolls but doesn't interact

Ignores push notifications

Old marketing playbook: Send more reminders. Escalate urgency. Add scarcity.

AI-Curated Silence playbook:

Stops all messaging for 21 days

Removes notifications entirely

Allows organic return

When the user comes back naturally, AI sends one relevant message. No urgency. High relevance.

Result: Higher engagement. Lower churn. Restored attention.
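The silence playbook above boils down to a small rule. This is a minimal sketch, not a production design: the 21 days comes from the scenario, while the function name, the action labels, and the "review manually" fallback when nobody returns are my own assumptions.

```python
from datetime import date
from typing import Optional

PAUSE_DAYS = 21  # pause length taken from the scenario


def next_action(today: date,
                pause_started: date,
                last_organic_visit: Optional[date]) -> str:
    """Decide what to do for one paused user (hypothetical action labels)."""
    # An organic return is any visit the user made after the pause began.
    returned = (last_organic_visit is not None
                and last_organic_visit > pause_started)
    if returned:
        return "send_one_relevant_message"  # no urgency, high relevance

    days_paused = (today - pause_started).days
    if days_paused < PAUSE_DAYS:
        return "stay_silent"  # no emails, no push notifications

    # Assumption: the scenario doesn't say what happens if the window
    # ends with no return, so this sketch hands it to a human.
    return "review_manually"


if __name__ == "__main__":
    start = date(2027, 1, 1)
    print(next_action(date(2027, 1, 10), start, None))
    print(next_action(date(2027, 1, 10), start, date(2027, 1, 9)))
```

Notice there's no "send a reminder" branch anywhere. The only message that goes out is triggered by the user, not by the calendar.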

Why This Changes Everything

We've built an entire industry around fighting for attention.

Optimize frequency. Increase touchpoints. Never let them forget you.

But attention is finite. And every brand is exhausting it.

The brands that know when to stay quiet will stand out.

Marketing becomes attention stewardship, not attention capture.

Think about the people you trust most. They don't spam you. They show up when it matters.

That's what AI-Curated Silence enables at scale.

The New KPIs That Will Matter

Forget open rates and click-throughs.

Here's what you'll track:

Silence effectiveness rate

Re-engagement after pause

Attention recovery score

Fatigue-adjusted lifetime value

Churn prevention via suppression

You'll optimize for restraint, not reach.

The Timeline

2026: Internal suppression logic experiments. Brands test "quiet periods" manually.

2027: Silence optimization models appear. AI starts predicting optimal pause windows.

2028: "Do-Not-Engage windows" become configurable in CRM platforms. Marketers can set silence rules.

2029-2030: Silence becomes a strategic layer in martech. The brands that master it dominate retention.

The Risks

This only works if the AI is accurate.

Misinterpret silence as abandonment? You lose the customer.

Over-pause and miss real opportunities? Revenue drops.

Plus, there's a cultural shift required. Marketing teams are trained to always engage. Doing nothing feels like failure.

But the data will prove it works.

What This Means for You

If you're running email campaigns, test pause windows now. Watch what happens when you give people space.

If you're building AI systems, start tracking attention fatigue signals. Not just engagement, but over-engagement.

If you're in retention, understand that sometimes the best action is no action.

The marketers who win in 2027-2030 won't be the ones who master frequency. They'll master restraint.

Because silence, when used strategically, is the loudest signal of confidence and respect.

Did You Know?

Libraries are using AI that can predict which books will become bestsellers by analyzing the reading speed of early borrowers and how often they pause at certain passages.

🗞️ Breaking AI News 🗞️

DeepSeek Drops New Efficiency Framework

DeepSeek published a paper this week that's going to shift how we think about AI development.

It's called Manifold-Constrained Hyper-Connections. Written by founder Liang Wenfeng and 18 other researchers.

The framework improves scalability while reducing computational and energy demands.

Translation: Build better AI with way less compute.

Why this matters: The US banned China from accessing NVIDIA's most advanced chips. That restriction was supposed to slow down Chinese AI development.

Instead, it forced them to innovate differently.

DeepSeek's R1 reasoning model last year was built at a fraction of the cost of OpenAI's models. Same performance. Way cheaper.

Now they're gearing up for R2, expected to drop in February around Spring Festival.

Bloomberg Intelligence analysts Robert Lea and Jasmine Lyu predict R2 "has potential to upend the global AI sector again, despite Google's recent gains."

Google's Gemini 3 overtook OpenAI in November to claim a top-3 slot in LiveBench's LLM performance ranking.

But China's low-cost models already claimed two slots in the top 15.

The new paper addresses training instability and limited scalability. Tests ran on models from 3 billion to 27 billion parameters.

It builds on ByteDance's 2024 research into hyper-connection architectures.

The authors say the technique holds promise "for the evolution of foundational models."

Here's what I find interesting: Silicon Valley spent years optimizing for scale. More compute. Bigger models. More data.

China couldn't do that. So they optimized for efficiency instead.

And it's working.

When you can't brute-force a problem, you're forced to actually solve it.

That's not just an AI lesson. That's a business lesson.

The teams with unlimited resources often build slower than the teams with constraints.

Constraints force creativity. Abundance allows laziness.

DeepSeek is proving that the compute arms race isn't the only path forward.

Over to You...

Are you using AI to save money or just spending more on fancier tools?

Hit reply and share.

To AI efficiency over hype,

Jeff J. Hunter

Founder, AI Persona Method | TheTip.ai
